With business conducted across multiple devices and locations, remote work has become the norm. This widespread access and connectivity also opens a gap in endpoint protection, exposing organizations to significant security threats. The business applications your teams use to connect and communicate are targeted by new and evolving predatory applications, and current protections are failing to keep up.

Zerify fills this gap by proactively locking down your computer's devices and processes that all business applications use when sharing data. Don't let your guard down - take proactive measures to safeguard your organization.

Your camera, microphone, speakers, keyboard, clipboard, and screen-sharing features are vulnerable to a range of security threats, including predatory programs and keyloggers. Leaving any of these unprotected opens the door to malicious attacks that compromise your company's confidential information.

Extend your company's Zero Trust security posture to the endpoint to safeguard against known and unknown threats. With Zerify Defender, you take a proactive approach to cybersecurity. Defender protects:

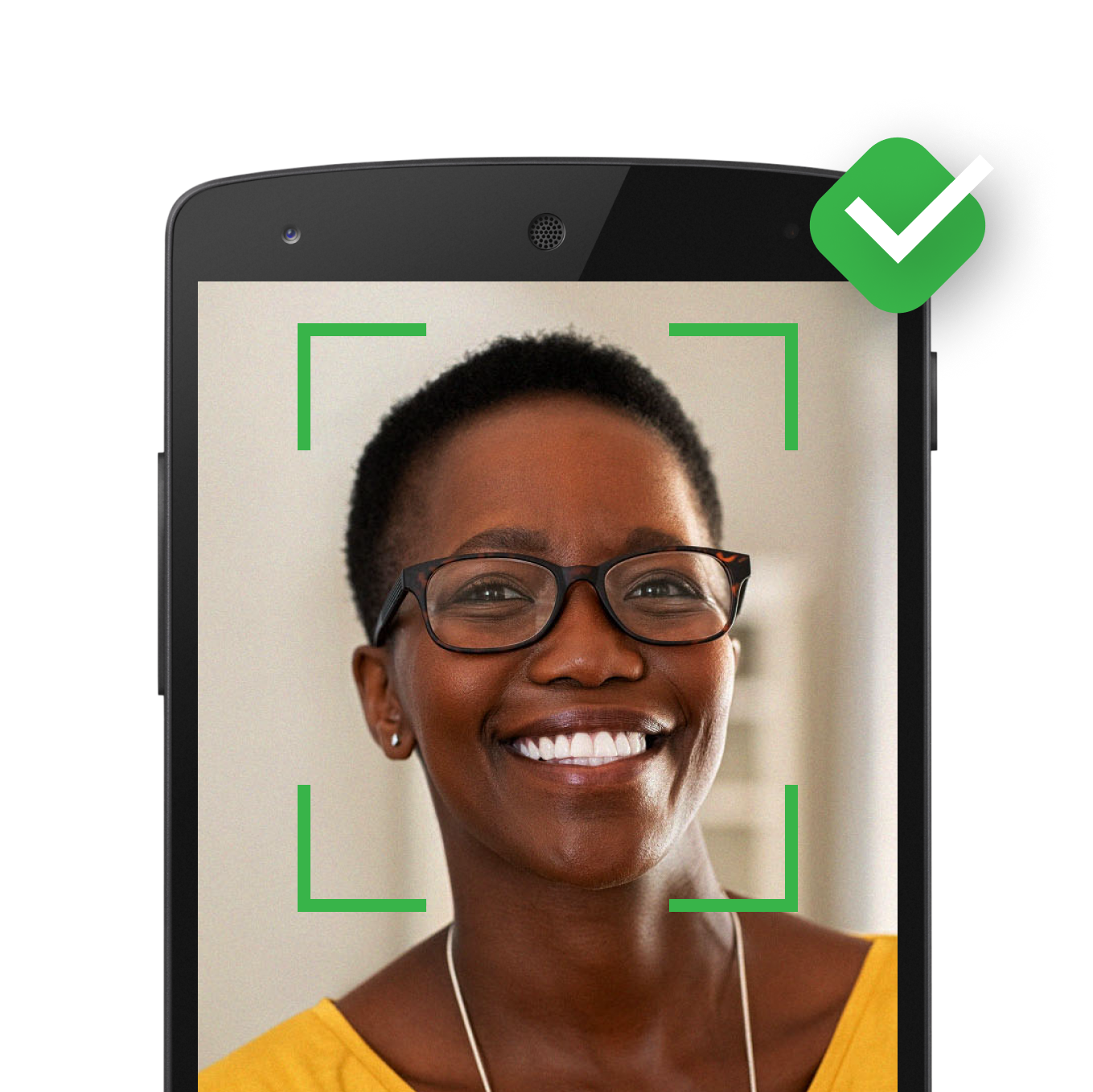

Video conferencing, screen sharing, and virtual meetings are now essential to organizations. However, security vulnerabilities still exist in these processes. Zerify Meet is the only video conferencing platform built on a Zero-Trust foundation, ensuring strong authentication and access controls at every stage to protect against hacking and intrusions.


The only “True” Zero Trust secure video conferencing platform.


Integrate Zero Trust secure video conferencing into your business application.


A Zero-Trust strategy ensures that users’ identities are verified at every step, keeping out hackers, spoofers, and emerging threats. Zero-Trust not only secures your organization from attacks and data breaches, but also provides better visibility into the status of your organization’s devices and services, enables more seamless collaboration between departments, and keeps hybrid teams safe and compliant. It is now more critical than ever for your video conferencing infrastructure to comply with a Zero-Trust model.

Meet securely and protect your clients’ financial information

Secure your communications with clients and protect against data breaches

Scale your practice and boost efficiency in a secure space

Securely share classified documents in private meetings

Secure student-issued devices and avoid costly lawsuits

“Zerify is the only known SecVideo product that will protect user devices from malware taking over cameras, microphones, clipboards, hijacking keyboards, prevent audio-out speakers stream eavesdropping, and provide anti-screen scraping.”

Zerify Meet is the only video conferencing platform in Forrester’s latest research report


EDISON, N.J., April 17, 2024 (GLOBE NEWSWIRE) -- Zerify Inc. (OTC PINK: ZRFY), a leading…

EDISON, N.J., March 07, 2024 (GLOBE NEWSWIRE) -- Zerify Inc. (OTC PINK: ZRFY), an industry…

EDISON, N.J., Feb. 29, 2024 (GLOBE NEWSWIRE) -- Zerify Inc. (OTC PINK: ZRFY), a pioneer…